
NotOpodcast: Episode 6

Welcome to the sixth episode of NotOpodcast, where Michael and Anshel join me to discuss a variety of topics such as No Man's Sky, the latest in VR gaming, Anshel's experiences at VRLA (the Virtual Reality expo in Los Angeles), the new Samsung Galaxy Note7, the newest GPUs on the market, firearms in video games, and some basics of pistol mechanics.

The timecodes are:

Gaming - 1:00 - No Man's Sky, latest in VR gaming

Technology - 22:23 - VRLA (Virtual Reality: Los Angeles) Expo, Samsung Galaxy Note7, latest GPUs (Graphics Cards)

Firearms - 1:01:07 - Guns in Games, Basics of Pistol Mechanics

NotOpodcast: Episode 3

Welcome to the third episode of NotOpodcast, where Kilroy and Michael join me to discuss a variety of topics such as the latest on E3 announcements from this week, physical phone keyboards, an in-depth look at the upcoming Xbox Project Scorpio, the tactical triad, and ignorance about AR15s.

The timecodes are:

Gaming - 0:45 - E3, Sony, Microsoft, Nintendo

Technology - 35:23 - Physical vs Software Phone Keyboards, Xbox Project Scorpio Specs

Firearms - 54:15 - Tactical Triad, Michael Moore AR15 Tweet

Review: Digital Storm Bolt II

Digital Storm is a company well known for pushing the limits of high-end desktops. While they have a variety of different models, only two fit into the 'slim' Small Form Factor (SFF) category. Whereas the Eclipse is the entry-level slim SFF PC designed to appeal to those working with smaller budgets, the Bolt is the other offering in the category, and aims much higher than its entry-level counterpart.

I had the opportunity to review the original Bolt when I still wrote for BSN, and I loved it, though there were a few design decisions I wanted to see changed.

Today I'm taking a look at the Digital Storm Bolt II. The successor to the original Bolt, it has undergone a variety of changes. Two of the most common complaints with the original were addressed: the Bolt II now supports liquid cooling for the CPU (including 240mm liquid coolers), and the chassis has been redesigned with a more traditional two-panel layout (as opposed to the shell design of the original).

Specifications:

The Bolt II comes in four base configurations that can be customized further:

Level 1 (Starting at $1674):

- Base Specs:

- Intel Core i5 4590 CPU

- 8GB 1600MHz Memory

- NVIDIA GTX 760 2GB

- 120GB Samsung 840 EVO SSD

- 1TB 7200RPM Storage HDD

- ASUS H97 Motherboard

- 500W Digital Storm PSU

Level 2 (Starting at $1901):

- Base Specs:

- Intel Core i5 4690K CPU

- 8GB 1600MHz Memory

- NVIDIA GTX 970 4GB

- 120GB Samsung 840 EVO SSD

- 1TB 7200RPM Storage HDD

- ASUS H97 Motherboard

- 500W Digital Storm PSU

Level 3 (Starting at $2569):

- Base Specs:

- Intel Core i7 4790K CPU

- 16GB 1600MHz Memory

- NVIDIA GTX 980 4GB

- 120GB Samsung 840 EVO SSD

- 1TB 7200RPM Storage HDD

- ASUS Z97 Motherboard

- 500W Digital Storm PSU

Battlebox Edition (Starting at $4098):

- Base Specs:

- Intel Core i7 4790K CPU

- 16GB 1600MHz Memory

- NVIDIA GTX TITAN Z 6GB

- 250GB Samsung 840 EVO SSD

- 1TB 7200RPM Storage HDD

- ASUS Z97 Motherboard

- 700W Digital Storm PSU

Note that the system I received for review was built before the release of Nvidia's GTX 900 series cards. These are the specifications for that system:

Reviewed System:

- Intel Core i7 4790K CPU (Overclocked to 4.6 GHz)

- 16GB DDR3 1866MHz Corsair Vengeance Pro Memory

- NVIDIA GTX 780 Ti

- 500GB Samsung 840 EVO SSD

- 2TB 7200RPM Western Digital Black Edition HDD

- ASUS Z97I-Plus Mini ITX Motherboard

- 500W Digital Storm PSU

- Blu-Ray Player/DVD Burner Slim Slot Loading Edition

This system is pretty impressive considering how small it is. The Bolt II measures 4.4"(W) x 16.4"(H) x 14.1"(L), or for our readers outside of the US, approximately 11cm(W) x 42cm(H) x 36cm(L).

Design and Software

The Bolt II ships with Windows 8.1, but also comes with Steam preinstalled and Big Picture mode enabled. This is because the Bolt II was originally slated to be a hybrid Steam Machine (Windows and SteamOS dual boot). Since Steam Machines had yet to materialize, the Bolt II shipped as a gaming PC designed for the living room. It includes feet attached to the side panel so the system can sit on its side.

This design decision also explains one of my largest annoyances with the computer: the front panel I/O ports are all on the right side of the chassis, near the front, at the bottom. If the system stands upright like a typical desktop, the ports are moderately inconvenient to access. Conversely, if the system is placed on its side in a living room setting (such as under a television), the ports are perfectly accessible.

Digital Storm mentioned to us that once Steam Machines are ready to roll, they'll be shipping the Bolt II as it was originally intended: as a hybrid Steam Machine. Luckily for us, Steam Machines are coming out this March, so expect to see some hybrid systems from Digital Storm soon.

One of the joys of a boutique system is a comforting lack of bloatware, as the Bolt II comes with very little preinstalled. The only software installed on the system was the system drivers, Steam (as previously mentioned), and the Digital Storm Control Center. Here is what the desktop looked like upon initial boot:

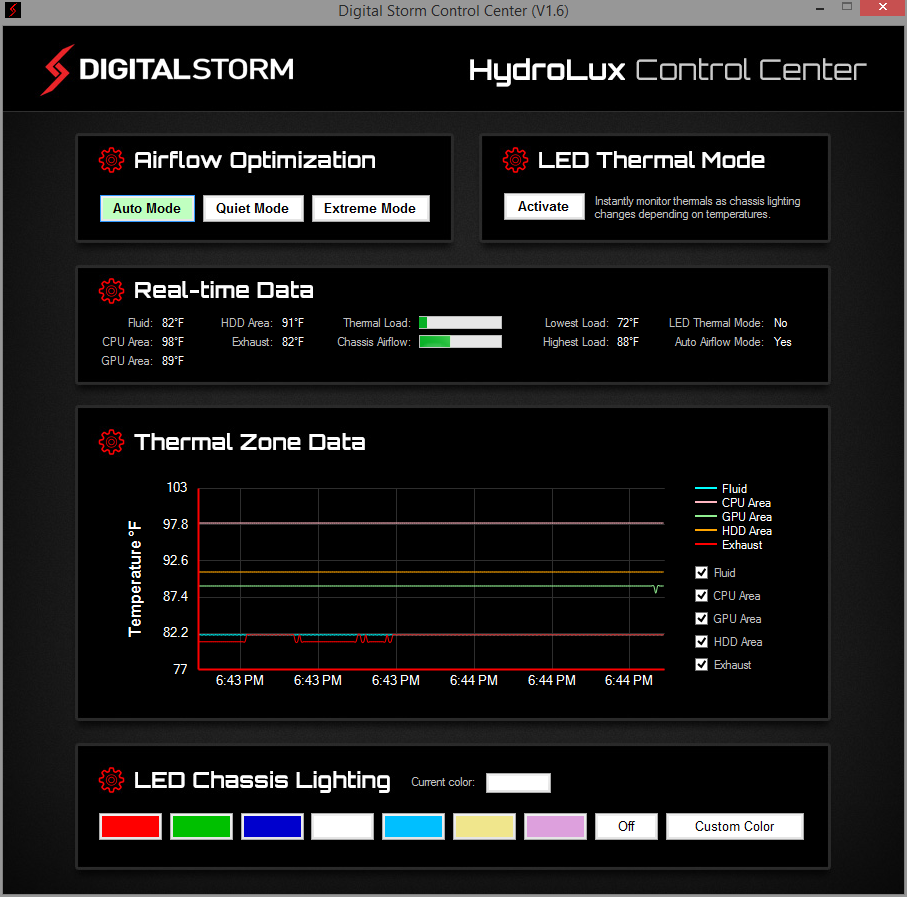

The Digital Storm Control Center is a custom piece of software for Digital Storm PCs, designed to manage a system's cooling and lighting and provide metrics for the user. Much to my surprise, the software is designed and coded in-house, which explains its level of polish and quality. Other companies often rely on third-party developers located in Asia, whose software solutions frequently prove underwhelming.

Synthetic Benchmarks

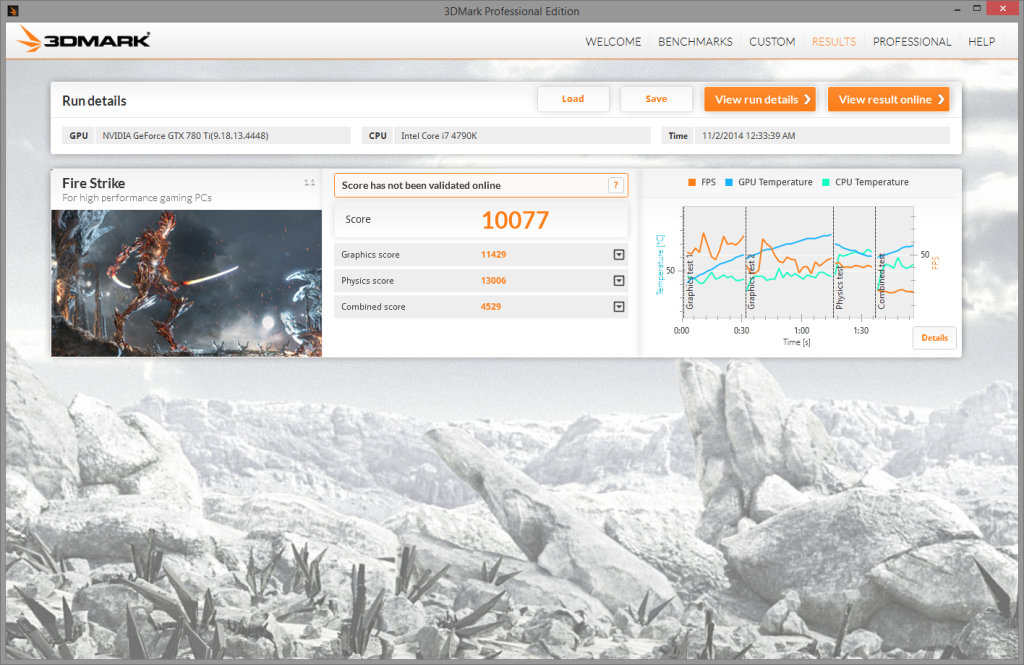

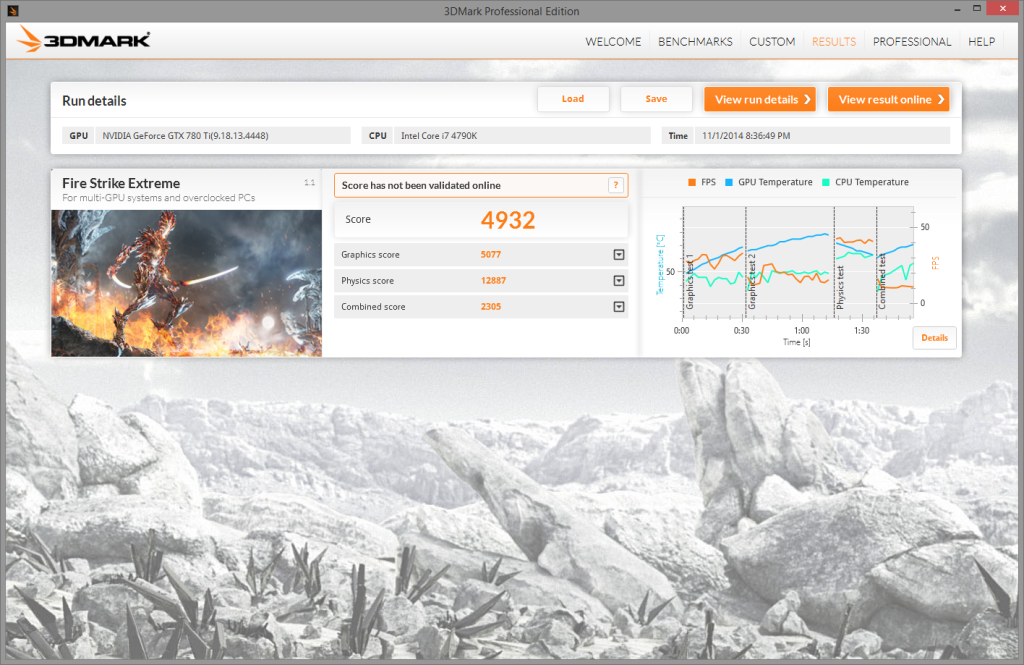

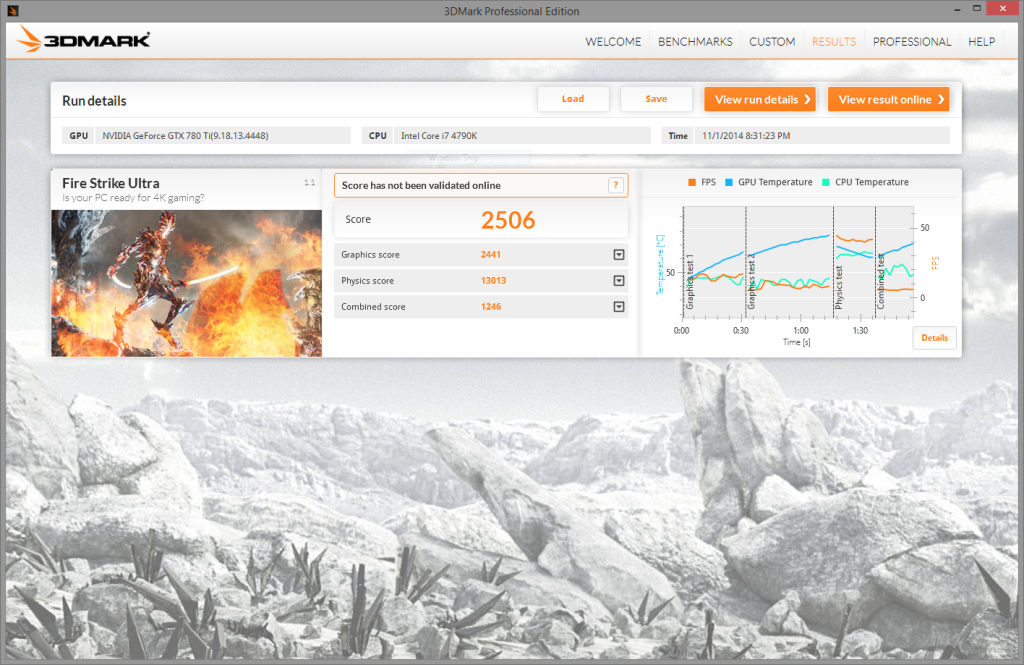

Moving on to benchmarks, I'll start with the synthetics:

Starting with 3DMark's Fire Strike, the Bolt II managed a score of 10077.

Running 3DMark's Fire Strike in Extreme mode, the system scores 4932.

Rounding out 3DMark's Fire Strike is Ultra mode, with the Bolt II getting a score of 2506.

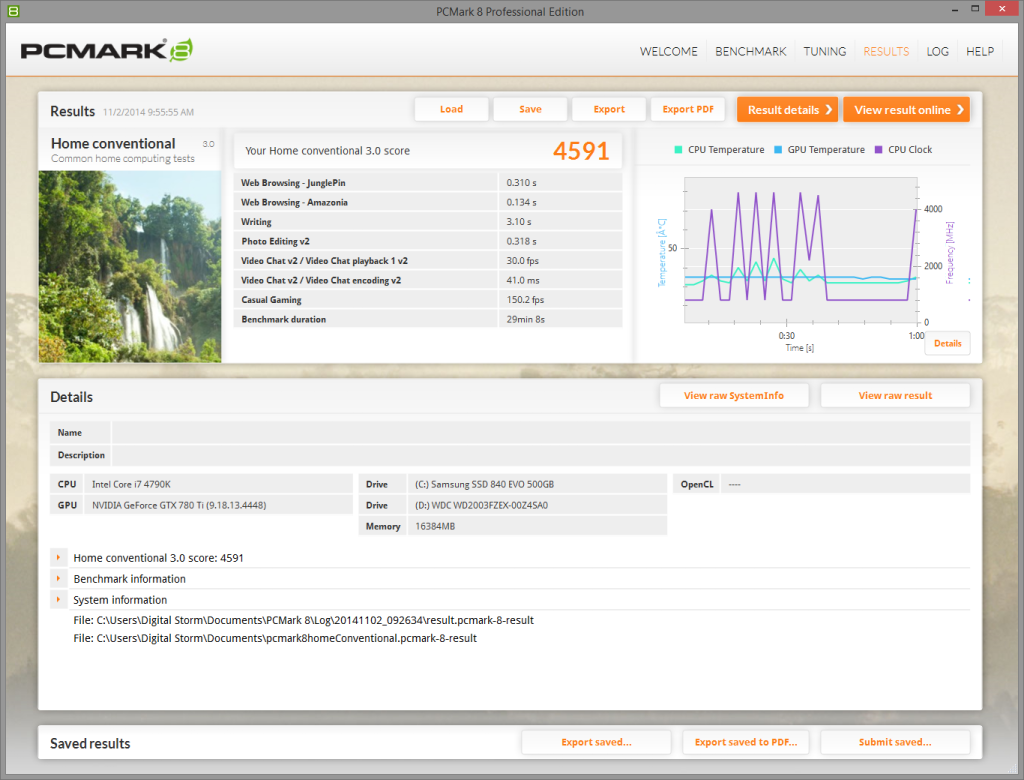

In PCMark 8, the Bolt II scores a 4591 when running the Home benchmark.

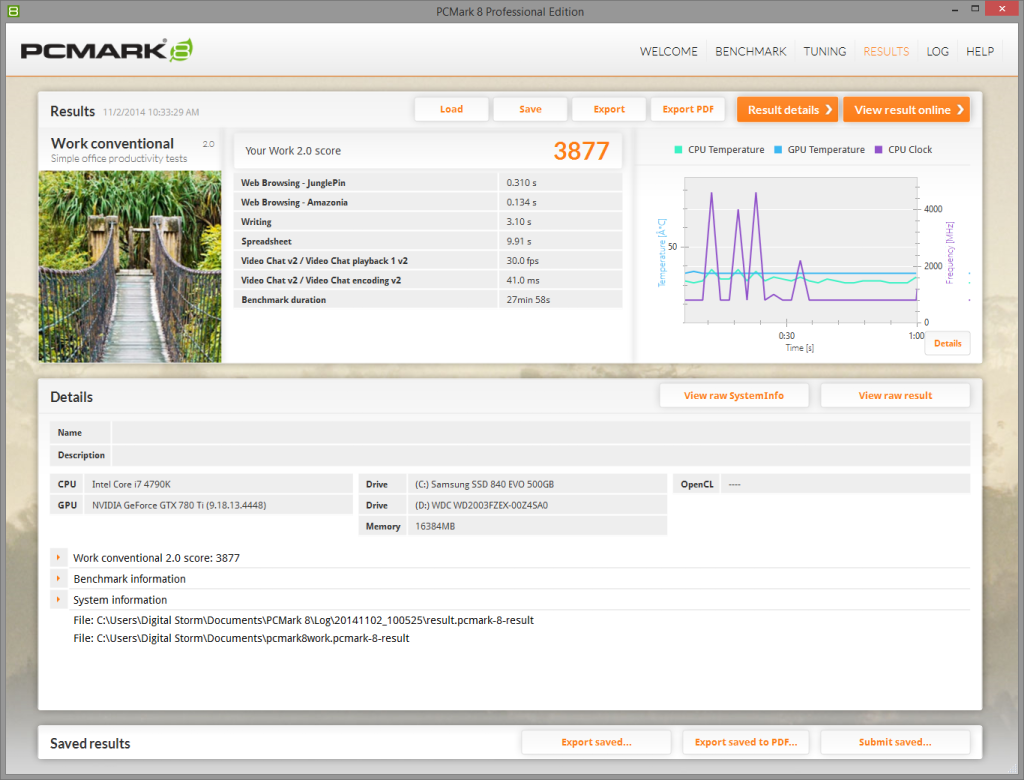

The other benchmark in PCMark 8 I ran was the Work benchmark, which had the Bolt II scoring 3877.

Now to cover AIDA64's sets of benchmarks:

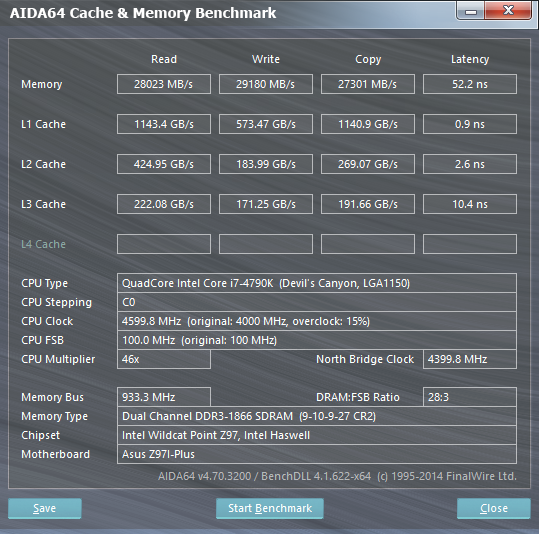

The AIDA64 Cache and Memory benchmark yielded a Read of 28023 MB/s, Write of 29180 MB/s, Copy of 27301 MB/s, and Latency of 52.2 ns on the Memory.

The system got a Read of 1143.4 GB/s, Write of 573.47 GB/s, Copy of 1140.9 GB/s, and Latency of 0.9 ns on the L1 Cache.

It scored a Read of 424.95 GB/s, Write of 183.99 GB/s, Copy of 269.07 GB/s, and Latency of 2.6 ns on the L2 Cache.

Finally, it produced a Read of 222.08 GB/s, Write of 171.25 GB/s, Copy of 191.66 GB/s, and Latency of 10.4 ns on the L3 Cache.

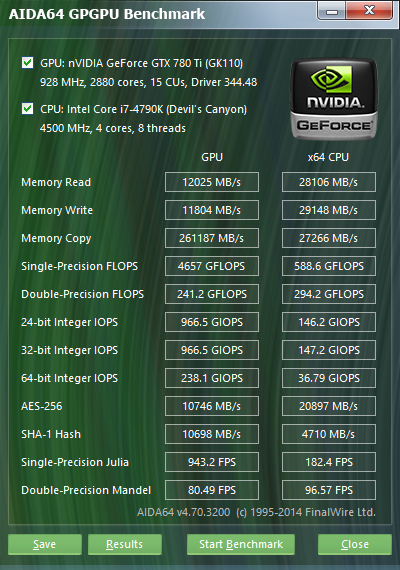

AIDA64's GPGPU Benchmark results are up next:

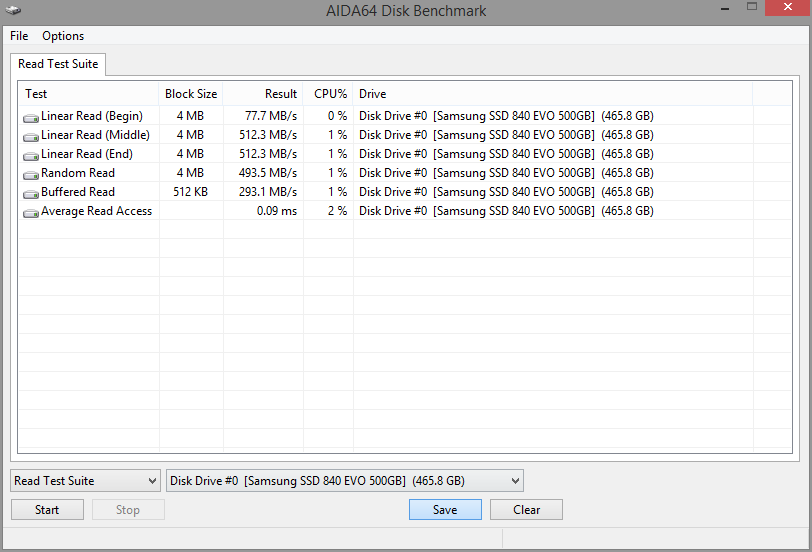

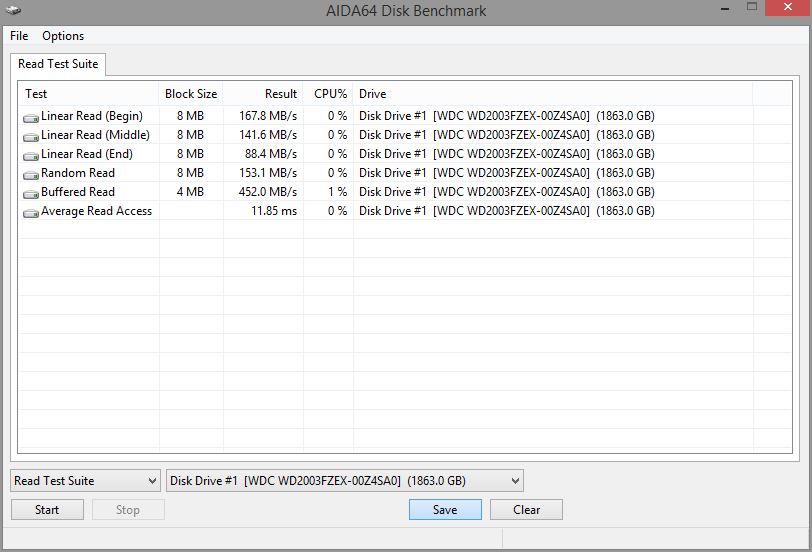

The AIDA64 Disk Benchmark was then run on both the Samsung 840 EVO SSD and the Western Digital 2 TB Black Edition HDD.

The Samsung 840 EVO SSD yielded an average Read of 378 MB/s across 5 different tests, and a latency of 0.09 ms.

The Western Digital 2 TB Black Edition HDD yielded an average Read of 201.5 MB/s across 5 different tests, and a latency of 11.85 ms.

Gaming Benchmarks

With the synthetic benchmarks covered, here are the results of the gaming benchmarks:

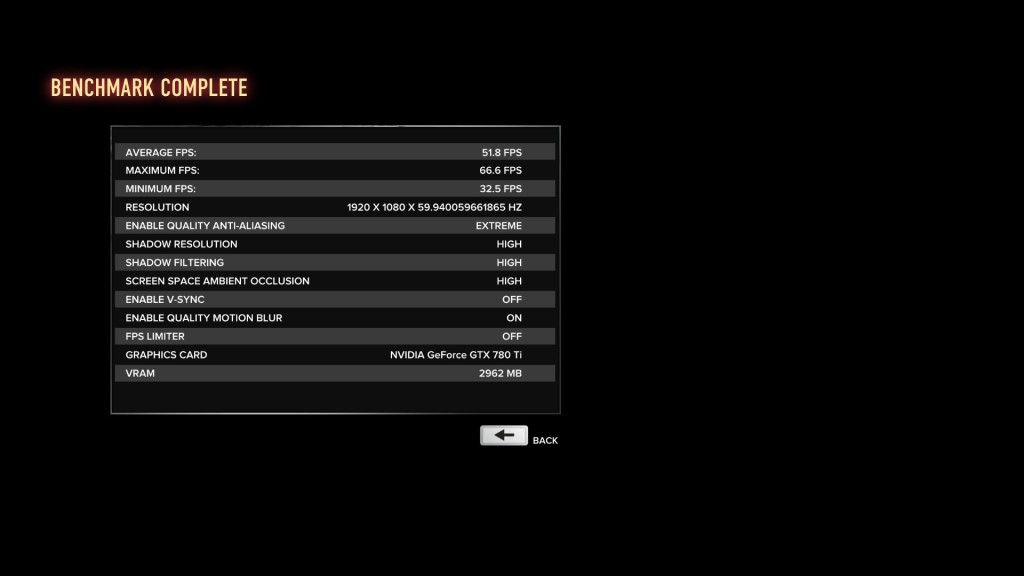

Starting off with Sleeping Dogs: Definitive Edition, I ran the benchmark using the settings listed in the screenshot. It scored an average of 51.8 FPS, a maximum of 66.6 FPS, and a minimum of 32.5 FPS.

Even when maxing out the game settings at 1080p resolution, the system maintains very playable framerates, which would look even better when paired with a display that utilizes FreeSync or G-Sync. In this case it would have to be G-Sync since this Bolt II configuration utilizes an Nvidia GPU.

Next up is Far Cry 4. I replayed the liberation of Varshakot Fortress in Outpost Master. Rather than use Nvidia's GeForce Experience optimized settings, which should theoretically provide the best overall experience, I cranked the game settings to Ultra at 1920x1080 resolution.

The game was very playable and smooth, with an average of 82.6 FPS, a maximum of 665 FPS, and a minimum of 56 FPS.

Moving on, I tested out Assassin's Creed Unity, now that most of the bugs have been sorted out. I ran Unity at the Ultra High setting, 1920x1080 resolution, again ignoring Nvidia’s optimized settings in order to see how the game would run at the top preset. I played through the intro sequence of the game, and excluded cutscenes.

The game was certainly playable, and often smooth, though there were occasional framerate drops and slight but noticeable tearing. The game had an average of 38 FPS, a maximum of 53 FPS, and a minimum of 19 FPS. This game would also benefit from a FreeSync or G-Sync enabled display.

Value and Conclusion

Clearly the Bolt II is a very capable system, and the fact that it's available in a variety of customizable configurations to better fit the needs and budget of the consumer is a plus (it can be configured as low as $1100, or as high as $7800 excluding any accessories). Though I was impressed with the original Bolt, the Bolt II is a clear improvement in every way.

Whether or not the cost of the Bolt II is a good value is something that has to be determined by the consumer. Would it be cheaper to build yourself a PC with identical performance? Absolutely, even when building with the same or similar components. However, there are a few things that make the Digital Storm Bolt II stand out from a home-built PC.

First is the form factor. The Bolt II uses a fully custom chassis designed by Digital Storm, so no one building a PC at home can build into a chassis with the same dimensions. Most mini-ITX systems won't be anywhere near as slim.

Second is the warranty and guarantees of build-quality and performance of the system, which you can only get by purchasing a PC from a reputable company with a solid customer service record.

Third is the convenience. The Bolt II comes prebuilt, with the OS and drivers installed. It can also include a stable overclock verified by Digital Storm, custom lighting and cooling, and cable management. All of these factors sure make building your own seem like more pain than it’s worth.

When compared to other system builders with similar SFF systems, the Bolt II still comes out number one in terms of fit and finish, as well as build quality. The metal chassis and custom design are really impressive, and serve to differentiate the Bolt II from other systems in a way that the Digital Storm Eclipse doesn't (though that is the budget model of course).

If you were to ask me whether I would build my own PC or purchase a Bolt II, my answer would probably still be to build my own system. However, this is simply due to personal preference. The form factor is not all that necessary for my current lifestyle, and I value choosing my own components and saving money enough to put up with the hassle of assembling the system myself. Despite all of this, were I to need a prebuilt PC with a small footprint, there's no doubt the Bolt II would be my first choice.

G-Sync vs FreeSync: The Future of Monitors

For those of you interested in monitors, PC gaming, or watching movies/shows on your PC, there are a couple of recent technologies you should be familiar with: Nvidia’s G-Sync and AMD’s FreeSync.

Basics of a display

Most modern monitors run at 30 Hz, 60 Hz, 120 Hz, or 144 Hz, depending on the display. The most common frequency is 60 Hz, meaning the monitor refreshes the image on the display 60 times per second. This dates back to old televisions, which were designed to run at 60 Hz because that was the frequency of the alternating current in the US power grid. Matching the refresh rate to the power frequency helped prevent rolling bars on the screen (known as intermodulation).
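A refresh rate is just a frequency, so the time each image stays on screen is its reciprocal. Here is a minimal Python sketch of that arithmetic for the refresh rates listed above:

```python
def refresh_period_ms(hz: float) -> float:
    """Time between display refreshes, in milliseconds, at a given refresh rate."""
    return 1000.0 / hz

for hz in (30, 60, 120, 144):
    print(f"{hz:>3} Hz: a new image every {refresh_period_ms(hz):.2f} ms")
```

At 60 Hz each image is held for about 16.67 ms; at 144 Hz, for about 6.94 ms.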

What the eye can perceive

It is a common myth that 60 Hz was chosen because that’s the limit of what the human eye can detect. In truth, when monitors for PCs were created, they were made to run at 60 Hz just like televisions, mostly because there was no reason to change it. The human brain and eye can interpret up to approximately 1000 images per second, and most people can reliably identify a framerate up to approximately 150 FPS (frames per second). Movies tend to run at 24 FPS. The first movie to be filmed at 48 FPS was The Hobbit: An Unexpected Journey, which got mixed reactions from audiences; some felt the high framerate made the movie appear too lifelike.

The important thing to note is that while humans can detect framerates much higher than what monitors can display, there are diminishing returns on increasing the framerate. For video to look smooth, it really only needs to run at a consistent 24+ FPS; however, the higher the framerate, the clearer the movements onscreen. This is why gamers prefer games to run at 60 FPS or higher, which looks very noticeably different from games running at 30 FPS (the standard for the previous generation of console games).

How graphics cards and monitors interact

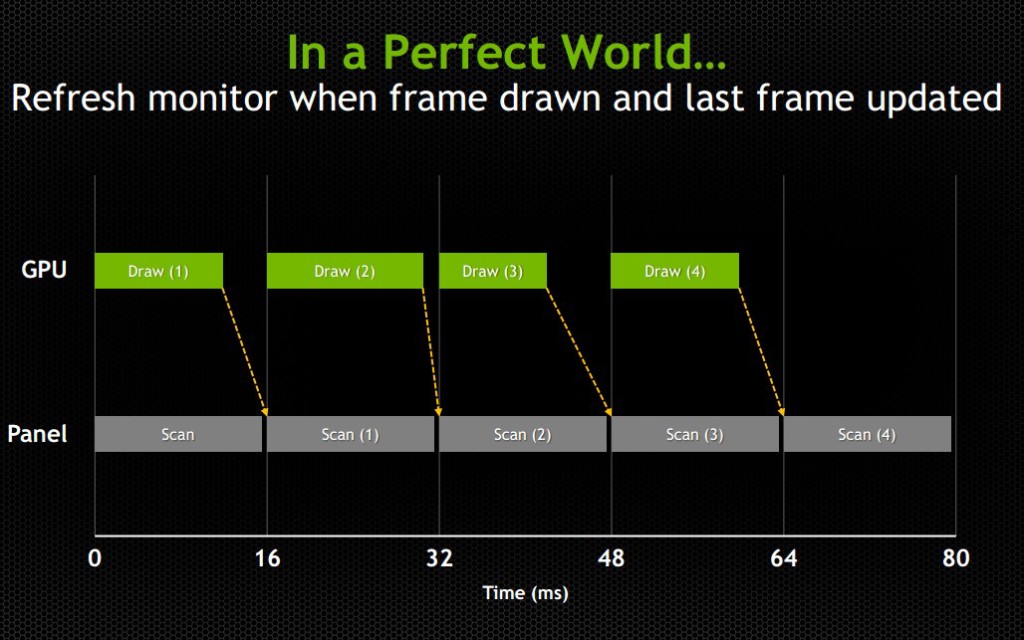

An issue with most games is that a display refreshes at a fixed rate (60 times per second for a 60 Hz display, or 120 or 144 times for faster ones), but the graphics card (GPU) does not necessarily send new images at that same steady rate. Even if the GPU sends 60 new frames in one second and the display refreshes 60 times in that second, the time between consecutive frames from the GPU can vary wildly.

Explaining frame stutter

This is important, because while the display will refresh the image every 1/60th of a second, the time delay between one frame and another being rendered by the GPU can and will vary.

Consider the following example: a game is running at 60 FPS, which is considered a good number. However, the frames are unevenly spaced. One third of them arrive 1/30th of a second after the previous frame, and the other two thirds arrive just 1/120th of a second after it. When the gap is 1/30th of a second, the monitor refreshes twice in that time, showing the previous image twice before it receives the new one. When the gap is 1/120th of a second, the GPU can finish the next frame before the display refreshes, so the frame in between never even makes it to the screen. This produces an effect known as micro-stuttering, which is clearly visible to the eye.
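The example can be simulated in a few lines. This Python sketch assumes a 60 Hz display that, at every refresh, shows the newest frame the GPU has finished. The frame pacing is hypothetical: one 1/30 s gap followed by two 1/120 s gaps, repeated. That gives three frames every 1/20 s, so the GPU averages exactly 60 FPS. Exact fractions are used to avoid floating-point ties at refresh boundaries.

```python
import bisect
from fractions import Fraction

REFRESH = Fraction(1, 60)  # a 60 Hz display refreshes every 1/60 s

def frames_on_screen(gaps, duration):
    """For each display refresh within `duration` seconds, return the index of the
    newest frame the GPU had finished by then (None if no frame was ready yet)."""
    finished, t = [], Fraction(0)
    for gap in gaps:  # gap = time between consecutive finished frames
        t += gap
        finished.append(t)
    shown = []
    refresh_at = REFRESH
    while refresh_at <= duration:
        newest = bisect.bisect_right(finished, refresh_at) - 1
        shown.append(newest if newest >= 0 else None)
        refresh_at += REFRESH
    return shown

# Hypothetical uneven pacing: a 1/30 s gap, then two 1/120 s gaps, repeated.
gaps = [Fraction(1, 30), Fraction(1, 120), Fraction(1, 120)] * 20
shown = frames_on_screen(gaps, duration=Fraction(1))

rendered = len(gaps)
displayed = len({f for f in shown if f is not None})
print(shown[:9])  # [None, 0, 2, 2, 3, 5, 5, 6, 8]
print(f"rendered {rendered}, displayed {displayed}, never shown {rendered - displayed}")
# rendered 60, displayed 40, never shown 20
```

Frames 1, 4, 7, and so on are replaced before any refresh picks them up, while frames 2, 5, 8, and so on stay up for two refreshes. The on-screen time is uneven even though the average is 60 FPS.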

This means that even though the game is being rendered at 60 frames per second, the frame rendering time varies and the result doesn’t look smooth. Micro-stuttering can also be caused by systems that use multiple GPUs. When the frames rendered by each GPU don’t line up in timing, the resulting delays produce a micro-stuttering effect as well.

Explaining frame lag

An even more common issue is that framerates rendered by a GPU can drop below 30 FPS when something is computationally complex to render, making whatever is being rendered look like a slideshow. Since not every frame is equally simple or complex to render, framerates will vary based on the frame. This means that even if a game is getting an average framerate of 30 FPS, it could be getting 40 FPS for half the time you’re playing and 20 FPS for the other half. This is similar to the frame time variance discussed in the paragraph above, but rather than appear as micro-stutter, it will make half of your playing time miserable, since people enjoy video games as opposed to slideshow games.
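The averaging point above is a time-weighted mean: total frames rendered divided by total time played. This small Python sketch uses a hypothetical 10-minute session split evenly between 40 FPS and 20 FPS:

```python
def average_fps(segments):
    """segments: list of (seconds, fps) pairs. Returns total frames / total time."""
    total_frames = sum(seconds * fps for seconds, fps in segments)
    total_time = sum(seconds for seconds, _ in segments)
    return total_frames / total_time

def share_of_time_below(segments, threshold_fps):
    """Fraction of playing time spent under `threshold_fps`."""
    below = sum(seconds for seconds, fps in segments if fps < threshold_fps)
    return below / sum(seconds for seconds, _ in segments)

# Hypothetical session: 5 minutes at 40 FPS, then 5 minutes at 20 FPS.
session = [(300, 40), (300, 20)]
print(average_fps(session))              # 30.0
print(share_of_time_below(session, 30))  # 0.5
```

The average reads a respectable 30 FPS, yet half of the session is spent below it.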

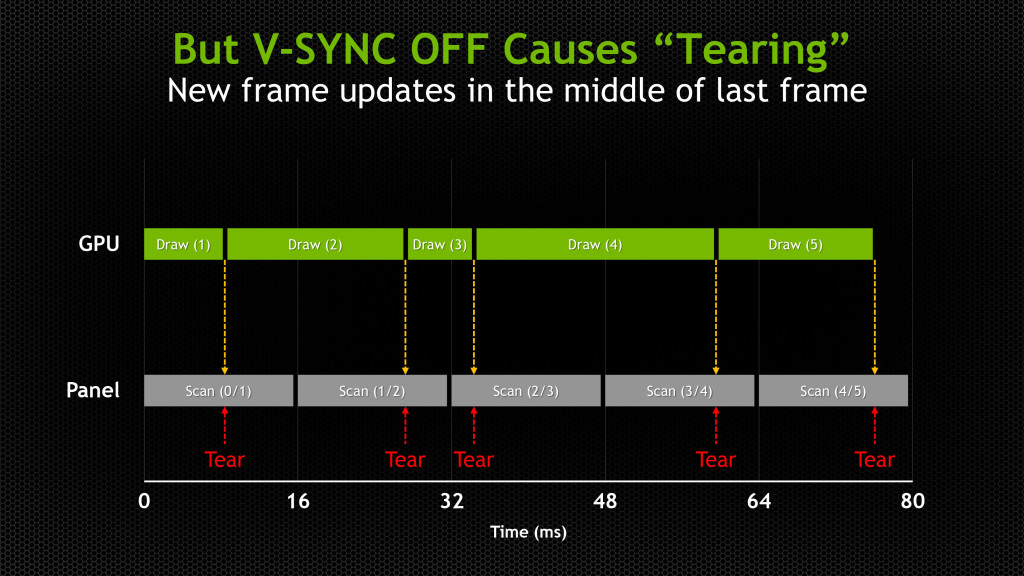

Explaining tearing

Probably the most pervasive issue, though, is screen tearing. Screen tearing occurs when the GPU delivers a new frame while the display is partway through drawing the previous one. The display carries on drawing from the new frame. Part of the screen then shows the previous frame and part shows the next one, usually split horizontally across the screen.
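Where the tear lands depends on how far through its top-to-bottom scan the display was when the new frame arrived. This rough Python sketch assumes a 60 Hz, 1080-line display whose scan spans the full refresh period, ignoring the brief blanking interval:

```python
def tear_line(swap_time_ms: float, refresh_hz: float = 60, active_lines: int = 1080) -> int:
    """Approximate row where a tear appears if a new frame is swapped in
    `swap_time_ms` after the display began drawing the current refresh.
    Simplification: the scan is assumed to take the whole refresh period."""
    period_ms = 1000.0 / refresh_hz
    if not 0 <= swap_time_ms < period_ms:
        raise ValueError("the swap must happen during this refresh")
    return int(swap_time_ms / period_ms * active_lines)

# Rows above the tear still show the old frame; rows from the tear down show the new one.
print(tear_line(8.0))  # 518
```

A swap 8 ms into a roughly 16.7 ms refresh puts the tear a little above the middle of the screen.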

These issues have been recognized by the PC gaming industry for a while, so unsurprisingly some solutions have been attempted. The most well-known and possibly oldest solution is called V-Sync (Vertical Sync), which was designed mostly to deal with screen tearing.

V-Sync

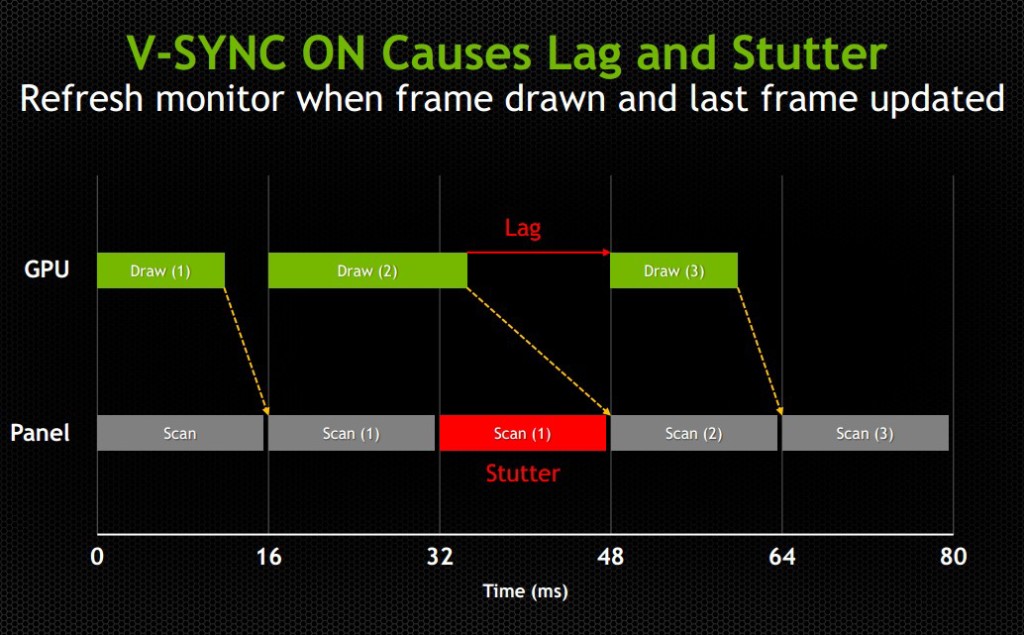

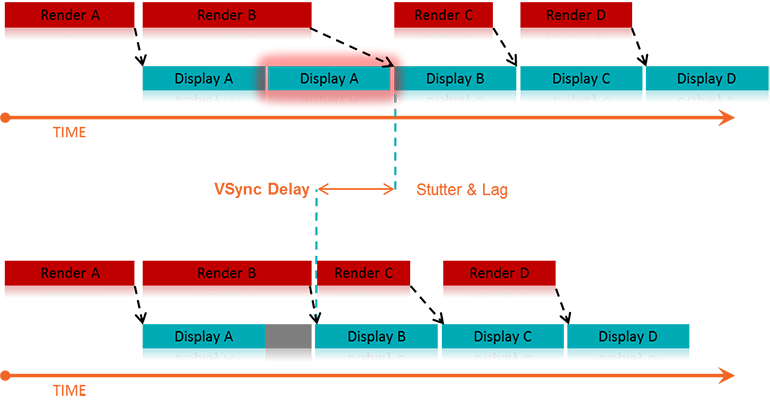

The premise of V-Sync is simple and intuitive. Screen tearing is caused by a GPU rendering frames out of time with the display, so it could be solved by syncing the rendering/refresh times of the two. On a typical 60 Hz display, for example, enabling V-Sync caps the GPU’s output at 60 FPS to match the display.

However, the problem here should be obvious from the discussion earlier in this article. Just because you tell the GPU to render a new frame by a certain time doesn’t mean it will have it fresh out of the oven in time. The GPU has to keep pace with the display. In some cases it easily will (such as when it would normally render over 60 FPS), whereas in others it can’t keep up (such as when it would normally render under 60 FPS).

While V-Sync fixes the issue of screen tearing, it adds a new problem: any framerate under 60 FPS means that you’ll be dealing with stuttering and lag onscreen, since the GPU will be choking on trying to match render time with the display’s refresh time. Without V-Sync on, 40-50 FPS is a perfectly reasonable and playable framerate, even if you experience tearing. With V-Sync on, 40-50 FPS is a laggy unplayable mess.
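The collapse at 40-50 FPS can be sketched numerically. This Python toy model assumes classic double buffering and a constant render time. Both are simplifications: triple buffering and real frame-time variation behave differently. Under these assumptions a finished frame waits for the next refresh, so each frame occupies a whole number of refresh periods.

```python
import math

def vsync_fps(render_ms: float, refresh_hz: float = 60) -> float:
    """Effective on-screen framerate with double-buffered V-Sync (toy model).
    Each frame is held for a whole number of refresh periods."""
    period_ms = 1000.0 / refresh_hz
    # Small tolerance so a render time of exactly one period isn't rounded up
    # by floating-point noise.
    periods_per_frame = math.ceil(render_ms / period_ms - 1e-9)
    return refresh_hz / max(periods_per_frame, 1)

for gpu_fps in (75, 60, 50, 45, 40):
    print(f"GPU could render {gpu_fps} FPS -> {vsync_fps(1000.0 / gpu_fps):.0f} FPS displayed")
```

Under these assumptions, a GPU rendering anywhere between 30 and 60 FPS gets rounded down to 30 FPS on screen. That rounding is part of why the 40-50 FPS range feels so much worse with V-Sync on.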

For some odd reason, while the idea to match the GPU render rate to the display’s refresh rate has been around since V-Sync was introduced years ago, no one thought to try the other way around until last year: matching the display’s refresh rate to the GPU’s render rate. Surely it seems like an obvious solution, but I suppose the idea that displays had to render at 60, 120, or 144 Hz was taken as a given.

The idea seems much simpler and more straightforward. Matching a GPU’s render rate to a display’s refresh rate requires the GPU to have enough computational horsepower to keep pace with the display. The display does not care how complex a frame is to render; it just chugs along at 60+ Hz. Matching a display’s refresh rate to a GPU’s render rate only requires the display to refresh when the GPU signals that a new frame has been sent.

This means that any framerate above 30 FPS should be extremely playable. It resolves the tearing issues found when playing without V-Sync, as well as the stuttering and lag caused by dropping below 60 FPS when playing with V-Sync. Suddenly the range of 30-60 FPS becomes much more playable, and in general, gameplay and on-screen rendering become much smoother.
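Matching the refresh to the GPU can be sketched this way: the display refreshes whenever a new frame arrives, but only within the range its panel supports. In this Python sketch the 30-144 Hz range is a hypothetical example, not a figure from any particular monitor. Real behavior below a panel's minimum refresh rate varies; this model only repeats the old image.

```python
def vrr_interval_ms(frame_ms: float, min_hz: float = 30, max_hz: float = 144) -> float:
    """Time between refreshes on a variable-refresh display (toy model).
    The display follows the GPU's frame time, clamped to the panel's range."""
    shortest = 1000.0 / max_hz  # the panel can't refresh faster than max_hz
    longest = 1000.0 / min_hz   # past this, it must refresh again, repeating the old frame
    return min(max(frame_ms, shortest), longest)

for gpu_fps in (200, 100, 45, 25):
    frame_ms = 1000.0 / gpu_fps
    print(f"{gpu_fps:>3} FPS: frame every {frame_ms:5.1f} ms, "
          f"refresh every {vrr_interval_ms(frame_ms):5.1f} ms")
```

Inside the panel's range (100 and 45 FPS here), the refresh simply tracks the frame time, with no tearing and no V-Sync-style rounding. Only outside that range does the display fall back to its limits.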

The modern solution

This solution comes in two forms: G-Sync – a proprietary design created by Nvidia; and FreeSync – an open standard designed by AMD.

G-Sync

G-Sync was introduced towards the end of 2013 as an add-on module for monitors (first available in early 2014). The G-Sync module is a board of proprietary design that replaces the scaler in the display. However, the G-Sync module doesn’t actually implement a hardware scaler, leaving that job to the GPU instead. Without getting into too much detail about how the hardware physically works, suffice it to say the G-Sync board sits at a point along the chain where it can determine when and how often the monitor draws the next frame. We’ll go a bit more in depth about it later in this article.

The problem with this solution is that display manufacturers must build their monitors with the G-Sync module embedded, or end users must buy a DIY kit and install it themselves in a compatible display. In both cases, the required proprietary hardware adds cost. While it is effective, and certainly helps Nvidia’s bottom line, it dramatically increases the cost of the monitor. Another important note: G-Sync only works on PCs equipped with an Nvidia GeForce GTX 650 Ti Boost or higher. This means that anyone running AMD graphics cards or Intel integrated graphics is out of luck.

FreeSync

FreeSync, on the other hand, was introduced by AMD at the beginning of 2014. Monitors supporting Adaptive-Sync (explained momentarily) have been announced, but they have yet to hit the market as of this writing (January 2015). FreeSync is designed as an open standard, and in April of 2014, VESA (the Video Electronics Standards Association) adopted Adaptive-Sync as part of its specification for DisplayPort 1.2a.

Adaptive-Sync is a required component of AMD’s FreeSync: it allows the monitor to vary its refresh rate based on input from the GPU. DisplayPort is a universal and open standard, supported by every modern graphics card and most modern monitors. However, while Adaptive-Sync is part of the official VESA specification for DisplayPort 1.2a and 1.3, it is an optional feature. This means that not all new displays using DisplayPort 1.3 will necessarily support Adaptive-Sync. We very much hope they do, as a universal standard would be great. However, including Adaptive-Sync adds some cost to building a display, mostly for validating and testing the display’s response.

To clarify the differences, Adaptive-Sync refers to the DisplayPort feature that allows for variable monitor refresh rates, and FreeSync refers to AMD’s implementation of the technology which leverages Adaptive-Sync in order to display frames as they are rendered by the GPU.

AMD touts the following as the primary reasons why FreeSync is better than G-Sync: “no licensing fees for adoption, no expensive or proprietary hardware modules, and no communication overhead.”

The first two are obvious, as they refer to the primary downsides of G-Sync: a proprietary design requiring licensing fees, and proprietary hardware modules that must be purchased and installed. The third reason is a little more complex.

Differences in communication protocols

To understand that third reason, we need to discuss how Nvidia’s G-Sync module interacts with the display. We’ll provide a relatively high-level overview of how that system works and compare it to FreeSync’s implementation.

The G-Sync module modifies the VBLANK interval. The VBLANK (vertical blanking interval) is the time between the display drawing the end of the final line of a frame and the start of the first line of a new frame. During this period the display continues showing the previous frame until the interval ends and it begins drawing the new one. Normally, the scaler or some other component in that spot along the chain determines the VBLANK interval, but the G-Sync module takes over that spot. While LCD panels don’t actually need a VBLANK (CRTs did), they still have one in order to be compatible with current operating systems which are designed to expect a refresh interval.

The G-Sync module modifies the timing of the VBLANK in order to hold the current image until the GPU has rendered the new one. The downside of this approach is that the system needs to poll the display repeatedly to check whether it is in the middle of a scan. If it is, the system waits until the display has finished its scan and then has it draw the new frame. This means there is a measurable (if small) negative impact on performance.
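To put rough numbers on the VBLANK, here is a Python toy model based on the common 1080p60 video timing: 1,125 total lines per refresh, of which 1,080 are visible. These line counts are chosen for illustration. They do not describe how the G-Sync module itself is built. In this model, holding the image until the next frame arrives amounts to stretching the blanking period.

```python
REFRESH_HZ = 60
ACTIVE_LINES = 1080
TOTAL_LINES = 1125  # common 1080p60 timing: 1080 visible + 45 blanking lines

line_time_ms = 1000.0 / (REFRESH_HZ * TOTAL_LINES)       # ~0.0148 ms per line
scanout_ms = ACTIVE_LINES * line_time_ms                  # ~16.0 ms drawing the image
vblank_ms = (TOTAL_LINES - ACTIVE_LINES) * line_time_ms  # ~0.67 ms of blanking

def stretched_vblank_ms(frame_interval_ms: float) -> float:
    """VBLANK length needed to hold the current image until the next frame arrives
    `frame_interval_ms` after the previous scan began (toy model). It never drops
    below the normal blanking time."""
    return max(frame_interval_ms - scanout_ms, vblank_ms)

print(f"fixed 60 Hz: scan {scanout_ms:.2f} ms + VBLANK {vblank_ms:.2f} ms")
print(f"frame every 25 ms: VBLANK stretched to {stretched_vblank_ms(25.0):.2f} ms")
```

With a frame arriving every 25 ms, the display still takes about 16 ms to draw each image. It then holds that image in an extended blanking period of about 9 ms instead of starting a new scan early.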

FreeSync, as AMD pointed out, does not utilize any polling system. The DisplayPort Adaptive-Sync protocols allow the system to send the signal to refresh at any time, meaning there is no need to check if the display is mid-scan. The nice thing about this is that there’s no performance overhead and the process is simpler and more streamlined.

One thing to keep in mind about FreeSync is that, like Nvidia’s system, it is limited to newer GPUs. In AMD’s case, only GPUs from the Radeon HD 7000 line and newer will have FreeSync support.

(Author's Edit: To clarify, Radeon HD 7000 GPUs and up as well as Kaveri, Kabini, Temash, Beema, and Mullins APUs will have FreeSync support for video playback and power-saving scenarios [such as lower framerates for static images].

However, only the Radeon R7 260, R7 260X, R9 285, R9 290, R9 290X, and R9 295X2 will support FreeSync's dynamic refresh rates during gaming.)

Based on these points, one might immediately conclude that FreeSync is the better choice, and I would agree, though there are pros and cons to both. I’ll leave out the obvious benefits that both systems already share, such as smoother gameplay.

Pros and Cons

Nvidia G-Sync

Pros:

- Already available on a variety of monitors

- Great implementation on modern Nvidia GPUs

Cons:

- Requires proprietary hardware and licensing fees, and is therefore more expensive (the DIY kit costs $200, though it’s probably a lower cost to display OEMs)

- Proprietary design forces users to only use systems equipped with Nvidia GPUs

- Two-way handshake for polling has a negative impact on performance

AMD FreeSync

Pros:

- Uses Adaptive-Sync which is already part of the VESA standard for DisplayPort 1.2a and 1.3

- Open standard means no extra licensing fees, so the only extra costs for implementation are compatibility/performance testing (AMD stated that FreeSync enabled monitors would cost $100 less than their G-Sync counterparts)

- It can send a refresh signal at any time, so there’s no need for a polling system and no communication overhead

Cons:

- While G-Sync monitors have been available for purchase since early 2014, there won’t be any FreeSync enabled monitors until March 2015

- Despite it being an open standard, neither Intel nor Nvidia has announced support for it (or even for Adaptive-Sync, for that matter), meaning for now it’s an AMD-only affair

Final thoughts

My suspicion is that FreeSync will eventually win out, despite G-Sync’s existing market share. The reason is that Nvidia does not account for 100% of the GPU market, and G-Sync is limited to Nvidia systems. Despite its reluctance, Nvidia will likely support Adaptive-Sync eventually and release drivers that make use of it. This is especially likely because displays with Adaptive-Sync will be significantly more affordable than G-Sync enabled monitors, while performing the same or better.

Eventually Nvidia will lose this battle, and consumers will benefit most when they finally give in. Until then, if you have an Nvidia GPU and want smooth gameplay now, there are already plenty of G-Sync enabled monitors on the market. If you have an AMD GPU, you’ll see your options start to open up within the next couple of months. In any case, both systems provide a tangible improvement for gaming and really any task that uses real-time rendering. So either way, in the battle between G-Sync vs FreeSync, you win.